Learning a new language is hard even under the best circumstances: it takes commitment and patience to make progress! At Duolingo, we spend a lot of time and effort making sure that your time and effort are put to good use. To make sure that every minute you spend on Duolingo brings you closer to your goal, we are constantly working with our learners (yes, you!) to find ways, both big and small, to improve our courses. Here are a few of the ways that you help make the Duolingo experience both fun and effective!

User experience research helps us understand what learners think of new features

As we develop new ideas and features for Duolingo, we want to make sure that we hear what our learners think of them. One of the ways we do this is through User Experience (UX) research, which involves engaging directly with our learners to understand their needs, attitudes, behaviors, and motivations.

UX research takes many forms: we might send out a survey to hundreds or thousands of learners to learn about attitudes at scale, or we might invite a handful of people to sit down with us for one-on-one interviews to hear more about how they use a specific feature.

For example, through a series of interviews, we learned more about how Duolingo learners think about the mistakes they make while learning a language. We found that learners wanted to know what mistakes they’ve made in past lessons, and wanted the opportunity to practice their “weak areas” so they could improve. One learner shared her particular frustrations: “Why do I keep making these mistakes? I'm eternally asking, Wait, what are the forms for this tense?” That got us thinking…

The research was the inspiration for a new subscription feature called the “Practice Hub.” Practice Hub provides a central place for learners to revisit previous mistakes with targeted practice, and also provides specific practice lessons for speaking and listening skills. This is just one example of how Duolingo is constantly shaped by candid input from our learners!

Small-scale experiments with learners help us test-drive new features

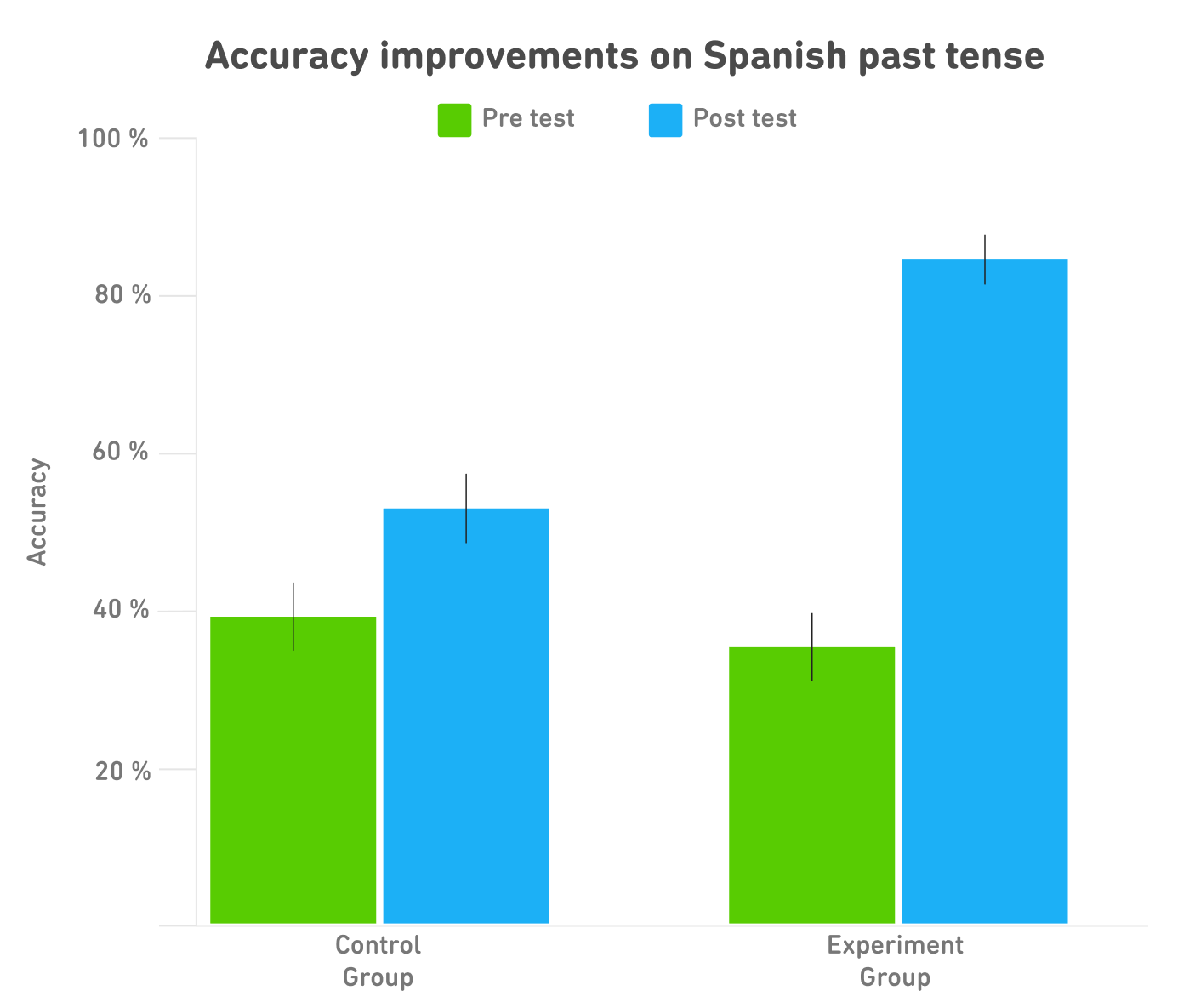

We’re constantly testing new ways to teach language in our courses. To understand how new course features impact learning, we use small-scale, targeted, controlled experiments. For example, we ran an experiment to see how effective grammar lessons were at teaching learners how to talk about events in the past (such as “they traveled”), using the Spanish preterite tense.

We recruited Duolingo learners from our Spanish course who hadn’t encountered the preterite yet in lessons, and who said they didn’t know much Spanish before starting the course. This gave us more confidence that any improvements we saw would be due to what they learned in the app. These learners were randomly assigned to one of two groups. One group (the "control" group) was introduced to the topic through our regular lessons, where we teach new grammar topics like the preterite alongside vocabulary related to a theme, like “travel”. The other group (the "experimental" group) was introduced to the topic through specific grammar lessons that focused just on the preterite, with no other theme. These grammar lessons also included minimal explicit instruction, such as verb conjugation tables.

Both the control group and the experimental group were tested before (pre-test) and after (post-test) these lessons, and comparing the pre-test and post-test scores allowed us to measure how much the two groups learned. Both groups of learners made fewer grammatical mistakes after the lessons, which tells us that both types of lessons were effective. But grammar lessons were more effective: the group who learned through regular lessons improved their accuracy from 40% to 54% (an increase of 14 percentage points on average), but those who learned through grammar lessons improved their accuracy from 36% to 86%, a whopping 50 percentage points! These kinds of data from our learners help us see that a new feature is on the right track to enhance learning.
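If you'd like to check the math yourself, here is a minimal sketch of the comparison in Python. The scores are the averages reported above; the function and variable names are our own illustrative choices, not part of any Duolingo tool.

```python
def gain_in_points(pre: int, post: int) -> int:
    """Return the change from pre-test to post-test, in percentage points."""
    return post - pre


# Average accuracy (percent correct) on the pre-test and post-test,
# taken from the results reported above. Group labels are illustrative.
scores = {
    "control": (40, 54),       # regular, theme-based lessons
    "experimental": (36, 86),  # focused grammar lessons
}

gains = {group: gain_in_points(pre, post) for group, (pre, post) in scores.items()}

# How much more the experimental group improved than the control group.
extra_gain = gains["experimental"] - gains["control"]

for group, gain in gains.items():
    print(f"{group}: +{gain} percentage points")
print(f"extra gain from grammar lessons: {extra_gain} percentage points")
```

Comparing gains rather than final scores matters here because the two groups started from slightly different pre-test averages (40% and 36%).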

Large-scale experiments help us evaluate new features

Once a feature has been developed, even if learners have told us it delivers a great experience and we have initial evidence that learners benefit from the feature, our work doesn’t stop. We spend a lot of time understanding how millions of learners interact with Duolingo: what features you use, which ones you don’t, and whether or not it seems like you’re mastering language concepts. We do this by launching new features through A/B tests. This means we launch the feature to a subset of learners to see how it impacts their experience, compared to another subset of learners who don’t have the new feature. This is one reason why everyone’s Duolingo app is a little different, even if you’re practicing the same language as a friend!
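For the curious, one common way to split learners into groups for an A/B test is to hash a learner ID together with the experiment name. The sketch below shows that general technique; it is an assumption for illustration only, not a description of Duolingo's actual system, and `assign_variant`, `learner_id`, and the experiment name are all hypothetical.

```python
import hashlib


def assign_variant(learner_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically place a learner in "treatment" or "control".

    Hypothetical helper: hashing the experiment name with the learner ID
    gives each learner a stable bucket per experiment, so the same learner
    always sees the same version of a feature.
    """
    key = f"{experiment}:{learner_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "treatment" if bucket < treatment_share else "control"


# Example: the same learner can land in different groups for different
# experiments, which is why no two apps look exactly alike.
# Both experiment names below are made up for this sketch.
for experiment in ("end-of-session-message", "new-practice-screen"):
    print(experiment, assign_variant("learner-123", experiment))
```

Because the assignment depends only on the ID and the experiment name, no lookup table is needed, and each experiment splits learners independently of the others.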

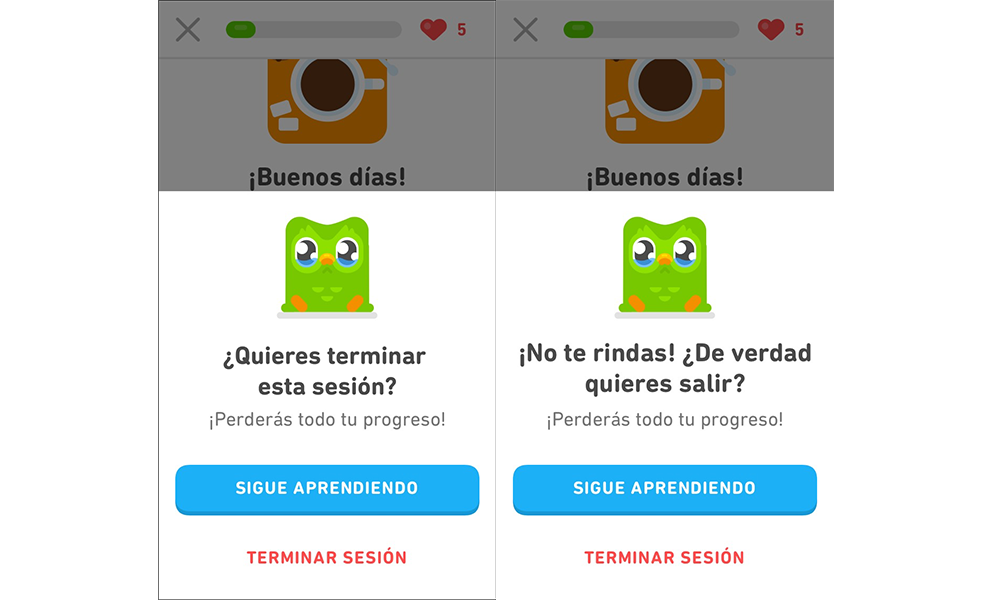

For example, it is very important that instructions on Duolingo are translated clearly across languages. As we test various translation options, you and your friends might see different messages at the end of a session.

At any given time, there are hundreds of experiments testing a variety of features and messages meant to help make learning more fun and effective. This means that right now, you are very likely part of several experiments that make your Duolingo experience unique compared to other learners, and every lesson you complete gives us valuable data about what’s working, and what isn’t!

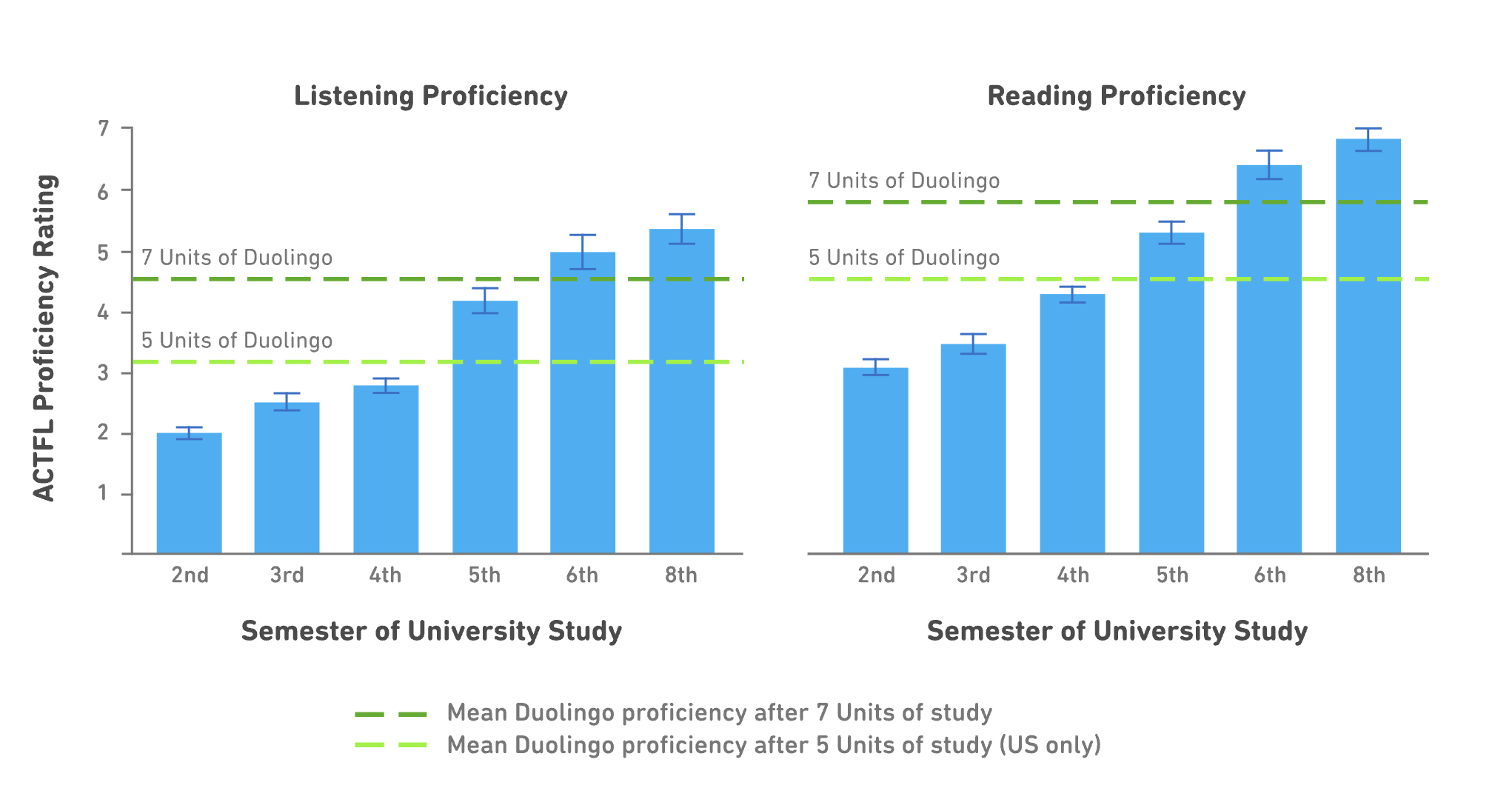

Efficacy studies help us assess learners’ outcomes in our courses

Finally, we also work hard to make sure that Duolingo courses improve learners' overall language proficiency. We run research studies and collaborate with university researchers to assess how well our learners are acquiring particular skills, like reading, listening, and speaking. This research confirms that our courses are effective learning tools, according to standardized language tests. In a pair of recent studies, we found that learners who completed five units of their French or Spanish course on Duolingo performed as well on reading and listening tests as students who had completed four semesters of university language study! We also saw that learners who completed seven units on Duolingo scored as well as students who completed five semesters of study!

These types of efficacy studies help us to continually evaluate our own teaching methods, and ensure that our lessons are fun and effective.

Thanks for your help!

At Duolingo, we are committed to delivering the best language education in the world. To achieve this goal, we rely on the most valuable part of Duolingo: you, our learners! Whether it’s through UX interviews, controlled experiments, in-app testing, efficacy studies, or one of the many other methods in our toolbox, we are constantly working to make Duolingo both effective and delightful. So keep your eyes open: you may get an email from us asking for your help! And if you do, know that you’re making language education better for everyone.